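If the degree of correlation between variables is high enough, it can cause problems when fitting the model. We now need to tell R what our linear regression model is. In Python, the inverted correlation matrix of the independent variables can be printed as follows:

    import numpy as np
    import pandas as pd

    # ivar is a pandas DataFrame holding the independent variables
    pd.set_option('display.max_columns', 8)
    print('== inverted correlation matrix ==')
    print('')
    print(pd.DataFrame(np.linalg.inv(ivar.corr()), index=ivar.columns, columns=ivar.columns))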

Identifying variables contributing to multicollinearity

One method for detecting whether multicollinearity is a problem is to compute the variance inflation factor (VIF).
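As a minimal sketch of the idea, the VIF of each variable can be read off the diagonal of the inverted correlation matrix of the independent variables. The helper names `invert` and `vif_from_corr` and the example correlation values are illustrative assumptions, not taken from the original analysis. The code is plain Python so that it runs without extra packages; in practice `np.linalg.inv` would do the inversion.

```python
def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Augment each row with the matching row of the identity matrix.
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        # Pick the row with the largest entry in this column as the pivot.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular (perfect multicollinearity)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vif_from_corr(corr):
    """Return the VIFs, i.e. the diagonal of the inverse correlation matrix."""
    inverse = invert(corr)
    return [inverse[i][i] for i in range(len(corr))]


# Hypothetical correlation matrix: the first two variables are strongly correlated.
corr = [[1.0, 0.9, 0.1],
        [0.9, 1.0, 0.1],
        [0.1, 0.1, 1.0]]
vifs = vif_from_corr(corr)
print(vifs)
```

For two variables with correlation r, this reduces to the familiar formula VIF = 1 / (1 - r²).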

In the presence of multicollinearity, the solution of the regression model becomes unstable.

Categorical variables are either ordinal or nominal in nature, so we cannot say that they are linearly correlated. Therefore, the determinant of the normalized correlation matrix (R = X'X) without an intercept can be used to detect multicollinearity. This article talks about how we can correct a multicollinearity problem using the correlation matrix. Perfect multicollinearity is the violation of Assumption 6 (no explanatory variable is a perfect linear function of any other explanatory variables).

The independent variables are selected from `data`:

    ivar = data[['t1y', 't10y', 'hyield', 'cpi', 'ppi', 'oil', 'indpro', 'pce']]

• If multicollinearity is present in the predictor variables, one or more of the eigenvalues of the correlation matrix will be small (near zero).

The variance inflation factor (VIF) is used to detect multicollinearity in a model. It measures how strongly each independent variable is correlated with the other independent variables in a regression model.

"Multicollinearity refers to a situation in which two or more explanatory variables in a multiple regression model are highly linearly related." We saw an example of exactly what the Wikipedia definition is describing.

This correlation is a problem because independent variables should be independent.

The caret package has a function called `findCorrelation` that helps to identify correlated variables. Multicollinearity occurs when independent variables in a regression model are correlated. It means that the independent variables are linearly correlated with each other and that they are numerical in nature. To run the other multicollinearity diagnostics, we need to load the olsrr package (you may have to install it first if you have not used it before).

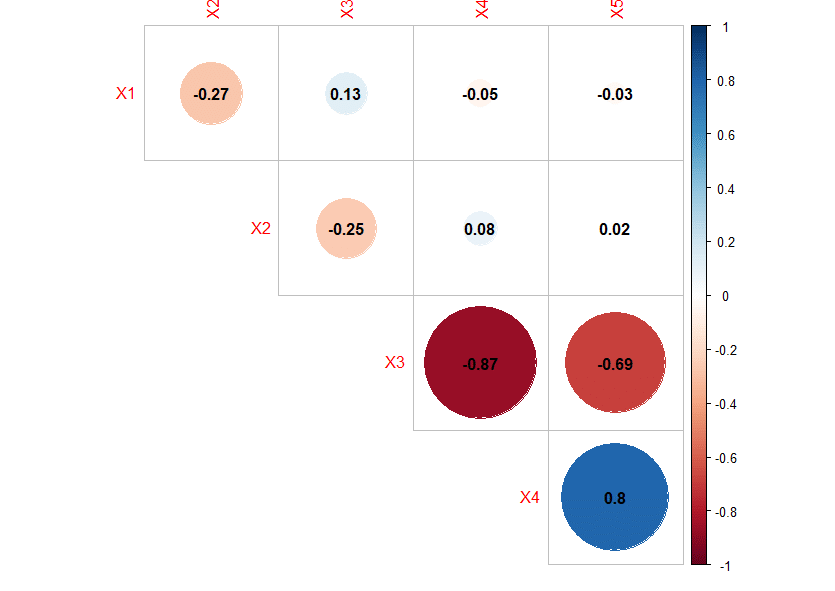

But multicollinearity can also occur between many variables, and this might not be apparent in bivariate scatterplots.

Multicollinearity is a term used in data analytics for the situation in which two explanatory variables in a linear regression model are found, through adequate analysis and to a predetermined degree of accuracy, to be correlated. The matrix X'X will be singular if it contains linearly dependent columns or rows.

Overall collinearity diagnostic measures:

• Determinant of the correlation matrix. However, this cannot be considered an acid test for detecting multicollinearity.

• Let $\lambda_1, \dots, \lambda_p$ be the eigenvalues of the correlation matrix.

For three explanatory variables, the determinant of the correlation matrix is

$$
|R| = \begin{vmatrix} 1 & r_{x_1 x_2} & r_{x_1 x_3} \\ r_{x_1 x_2} & 1 & r_{x_2 x_3} \\ r_{x_1 x_3} & r_{x_2 x_3} & 1 \end{vmatrix}.
$$

There is an extreme situation, called multicollinearity, where collinearity exists between three or more variables even if no pair of variables has a particularly high correlation. Perfect multicollinearity occurs when one independent variable is an exact linear combination of other variables.

The column rank of a matrix is the number of linearly independent columns it has.

Multicollinearity is a special case of collinearity in which a strong linear relationship exists among three or more independent variables even if no pair of variables has a high correlation. A correlation matrix may suggest that some of the predictors are at least moderately marginally correlated. This means that there is redundancy between predictor variables.

By Jim Frost.

Multicollinearity in regression analysis occurs when two or more predictor variables are highly correlated with each other, such that they do not provide unique or independent information in the regression model. Individual diagnostics compute different measures of multicollinearity for each regressor in the design matrix X. The condition number of the correlation matrix is defined as $k = \sqrt{\lambda_{\max} / \lambda_{\min}}$. The standardized determinant for the three-variable case is the determinant of the 3 × 3 correlation matrix of the explanatory variables.
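The condition number defined above can be sketched as follows. The eigenvalues are found with cyclic Jacobi rotations, a technique swapped in here so that the example needs only the standard library; with NumPy, `np.linalg.eigvalsh` would normally be used instead. The function names, the cutoff `1e-12` for treating the smallest eigenvalue as zero, and the example matrix are illustrative assumptions.

```python
import math


def symmetric_eigenvalues(matrix, max_sweeps=100, tol=1e-20):
    """Eigenvalues of a symmetric matrix by cyclic Jacobi rotations."""
    n = len(matrix)
    a = [[float(v) for v in row] for row in matrix]
    for _ in range(max_sweeps):
        # Stop once the off-diagonal entries are numerically zero.
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # Rotation angle that zeroes the (p, q) entry.
                diff = a[q][q] - a[p][p]
                theta = math.pi / 4 if diff == 0 else 0.5 * math.atan(2 * a[p][q] / diff)
                c, s = math.cos(theta), math.sin(theta)
                for k in range(n):  # rotate columns p and q
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # rotate rows p and q
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted(a[i][i] for i in range(n))


def condition_number(corr):
    """Return sqrt(lambda_max / lambda_min), or infinity if lambda_min is ~0."""
    eigenvalues = symmetric_eigenvalues(corr)
    lam_min, lam_max = eigenvalues[0], eigenvalues[-1]
    if lam_min <= 1e-12:
        return math.inf
    return math.sqrt(lam_max / lam_min)


# Hypothetical correlation matrix with one strongly correlated pair.
corr = [[1.0, 0.9, 0.1],
        [0.9, 1.0, 0.1],
        [0.1, 0.1, 1.0]]
print(symmetric_eigenvalues(corr))
print(condition_number(corr))
```

A small smallest eigenvalue drives the condition number up, which is the same signal as an eigenvalue near zero.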

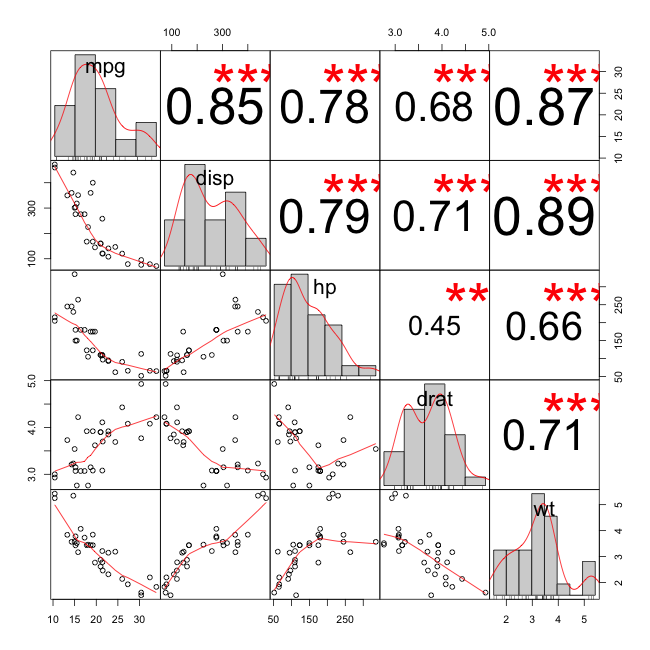

As we've seen, a scatterplot matrix can point to pairs of variables that are correlated.

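The multicollinearity test is done only on the independent variables. The VIF is a measure of how much the variance, and hence the standard error, of an estimated coefficient is inflated by multicollinearity. In the case of perfect multicollinearity, the simple correlation coefficients are equal to unity, so the determinant of the correlation matrix becomes zero. That is,

$$
\begin{vmatrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{vmatrix} = 0.
$$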
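To make the determinant argument concrete, here is a small sketch for the three-variable case. Expanding the 3 × 3 correlation determinant gives 1 + 2·r12·r13·r23 − r12² − r13² − r23². The function name and the example correlation values are illustrative assumptions.

```python
def corr_determinant_3(r12, r13, r23):
    """Determinant of the 3x3 correlation matrix with off-diagonal entries r12, r13, r23."""
    return 1 + 2 * r12 * r13 * r23 - r12 ** 2 - r13 ** 2 - r23 ** 2


print(corr_determinant_3(0.0, 0.0, 0.0))  # uncorrelated regressors
print(corr_determinant_3(1.0, 1.0, 1.0))  # perfect multicollinearity
print(corr_determinant_3(0.9, 0.1, 0.1))  # one strongly correlated pair
```

The determinant is 1 when the regressors are uncorrelated, and it shrinks toward 0 as the linear dependence among them grows.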

Under the rule of thumb used here, all variables with a VIF higher than 2.5 are considered to have a multicollinearity problem.

Multicollinearity is a term that relates to numerical variables. The variables are supposed to be independent but are found to be correlated in some regard. For example, body surface area (BSA) and weight are strongly correlated (r = 0.875), and weight and pulse are also correlated.

It will give a clear indication of the presence of multicollinearity.

The custom R function removes the variable with the largest VIF, one at a time, until all remaining variables have a VIF less than 2.5.

Perfect (or exact) multicollinearity occurs when two or more independent variables have an exact linear relationship between them. Approaches to detecting multicollinearity have been based on judging the magnitudes of various diagnostic measures.
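The removal procedure can be sketched in Python as follows. This is not the original R function, only an illustration of the same loop under stated assumptions: it works on a correlation matrix rather than on a fitted model, it computes each VIF as the ratio of a minor determinant to the full determinant (which equals the corresponding diagonal entry of the inverse), and the names `det`, `drop`, `vifs`, and `prune_by_vif` are hypothetical.

```python
def det(m):
    """Determinant by Laplace expansion along the first row (fine for small matrices)."""
    if len(m) == 1:
        return m[0][0]
    total = 0.0
    for j, value in enumerate(m[0]):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * value * det(minor)
    return total


def drop(m, k):
    """Return a copy of m without row k and column k."""
    return [row[:k] + row[k + 1:] for i, row in enumerate(m) if i != k]


def vifs(corr):
    """VIF of variable j = det(corr without row/column j) / det(corr)."""
    d = det(corr)
    if abs(d) < 1e-12:
        raise ValueError("correlation matrix is singular (perfect multicollinearity)")
    return [det(drop(corr, j)) / d for j in range(len(corr))]


def prune_by_vif(names, corr, threshold=2.5):
    """Drop the variable with the largest VIF until every VIF is below the threshold."""
    names = list(names)
    corr = [list(row) for row in corr]
    while len(names) > 1:
        scores = vifs(corr)
        worst = max(range(len(names)), key=scores.__getitem__)
        if scores[worst] < threshold:
            break
        names.pop(worst)
        corr = drop(corr, worst)
    return names


# Hypothetical example: x1 and x2 are strongly correlated, x3 is not.
corr = [[1.0, 0.9, 0.1],
        [0.9, 1.0, 0.1],
        [0.1, 0.1, 1.0]]
print(prune_by_vif(["x1", "x2", "x3"], corr))
```

When two variables tie for the largest VIF, this sketch drops the one that comes first. A real analysis would usually choose between them on substantive grounds.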

Suppose you want to remove a multicollinearity problem from your regression model with R.