The primary concern is that as the degree of multicollinearity increases, the coefficient estimates become unstable and the standard errors of the coefficients can become wildly inflated. An example in SPSS: here is an example of perfect multicollinearity in a model with two explanatory variables:

Learn to Test for Multicollinearity in SPSS With Data From

(Assume there are 8 predictors.) Testing for multicollinearity of predictor 1 (repeat this for all predictors):

This means that essentially x.

For example, if you square the term x to model curvature, there is clearly a correlation between x and x². Similarities between the independent variables will result in a very strong correlation. See the image for an example of SPSS output (simulated data, two predictors). Resolving multicollinearity with stepwise regression.
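The correlation between x and x² mentioned above is easy to check numerically. The sketch below is only an illustration: the values of `x` (1 through 10) are invented, and `pearson` is a small hand-written helper, not an SPSS function.

```python
import math

def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)

# Invented values for illustration only (not data from the text).
x = list(range(1, 11))
x_squared = [v ** 2 for v in x]

r_x_x2 = pearson(x, x_squared)
print(f"corr(x, x^2) = {r_x_x2:.3f}")
```

For these values the correlation comes out well above 0.9, even though x² was created by the analyst rather than observed.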

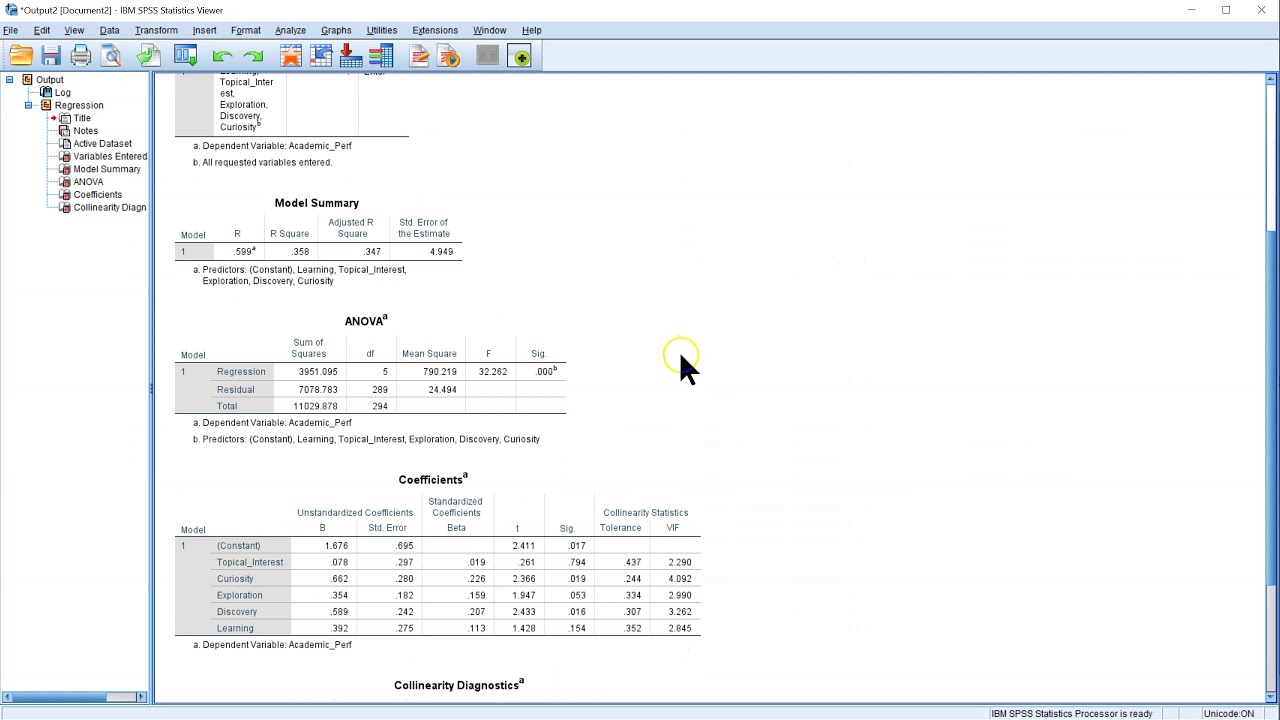

This example demonstrates how to test for multicollinearity specifically in multiple linear regression.

Several eigenvalues are close to 0, indicating that the predictors are highly intercorrelated and that small changes in the data values may lead to large changes in the estimates of the coefficients. Multicollinearity test example using SPSS: once the normality assumption for the regression model has been checked, the next step is a multicollinearity test, which determines whether the independent variables in the model are strongly related to one another. How to test for multicollinearity in SPSS logistic regression. Now we run a multiple regression analysis using SPSS.

Two control variables are average SAT scores and average ACT scores for.

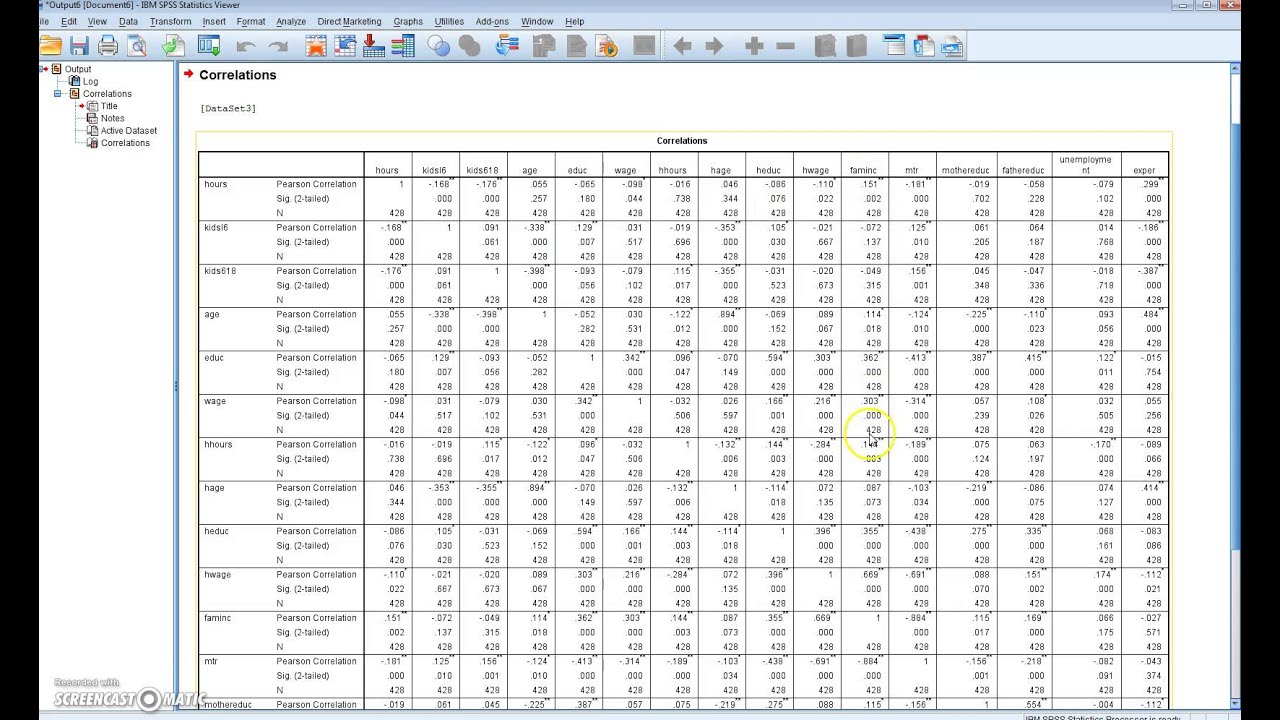

My hypothesis was, among other things, that information seeking does not differ between age groups (i.e., no main effect). A person’s height and weight, age and sales price of a car, or years of education and annual income. In this section, we will explore some SPSS commands that help to detect multicollinearity.

Very easily you can examine the correlation matrix.
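Outside SPSS, the same check can be sketched in a few lines. Everything here is an assumption made for illustration: the variable names, the invented values, and the 0.8 cutoff used to flag a pair (a rough convention, not a rule from the text).

```python
import math

def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)

def correlation_matrix(columns):
    """Nested dict with the correlation of every pair of named columns."""
    names = list(columns)
    return {r: {c: pearson(columns[r], columns[c]) for c in names} for r in names}

# Invented values for illustration only.
predictors = {
    "height_cm": [150, 160, 165, 170, 175, 180, 185, 190],
    "weight_kg": [50, 58, 63, 66, 72, 77, 85, 90],
    "age": [34, 22, 45, 29, 51, 38, 27, 41],
}

corr = correlation_matrix(predictors)
names = list(predictors)

# Flag predictor pairs whose absolute correlation exceeds the chosen cutoff.
CUTOFF = 0.8  # assumed threshold, adjust to taste
flagged = [(a, b) for i, a in enumerate(names) for b in names[i + 1:]
           if abs(corr[a][b]) > CUTOFF]

for name in names:
    print(name.ljust(10), "  ".join(f"{corr[name][other]:6.3f}" for other in names))
print("Highly correlated pairs:", flagged)
```

A correlation matrix only reveals pairwise relationships, so a predictor that depends on a combination of several others can slip through.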

This type of multicollinearity is present in the data itself rather than being an artifact of our model. Suppose we want to use “height in centimeters” and “height in meters” to predict the weight of a certain species of dolphin. The sample consists of U.S. colleges, the dependent variable is graduation rate, and the variable of interest is an indicator (dummy) for public vs.

For each of these predictor examples, the researcher just observes the values as they occur for the people in the random sample.

The collinearity diagnostics confirm that there are serious problems with multicollinearity. There are three diagnostics that we can run in SPSS to identify multicollinearity. Examples of correlated predictor variables (also called multicollinear predictors) are: The following examples show the three most common scenarios of perfect multicollinearity in practice.
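One such scenario, a predictor that is an exact multiple of another (height in meters and height in centimeters), can be sketched as follows. The heights are invented for illustration. The point is that the centered cross-products matrix of the two predictors has a determinant of zero, so it cannot be inverted and the regression coefficients have no unique solution.

```python
def centered(values):
    """Subtract the mean from every value."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]

# Invented dolphin heights, for illustration only.
height_m = [2.1, 2.4, 2.6, 2.9, 3.2]
height_cm = [100 * h for h in height_m]  # exact multiple of height_m

a, b = centered(height_m), centered(height_cm)
s_aa = sum(u * u for u in a)
s_bb = sum(v * v for v in b)
s_ab = sum(u * v for u, v in zip(a, b))

# Determinant of [[s_aa, s_ab], [s_ab, s_bb]], rescaled so it equals 1 - r^2.
relative_det = (s_aa * s_bb - s_ab ** 2) / (s_aa * s_bb)
print(f"1 - r^2 = {relative_det:.2e}")  # zero up to floating-point rounding
```

The rescaled determinant is the tolerance of either predictor, so a value of zero corresponds to an undefined (infinite) VIF.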

A fitness goods manufacturer has created a new product and has done a market test of it in four select markets.

SPSS then inspects which of these predictors really contribute to predicting our dependent variable and excludes those that don't. Multicollinearity in SPSS: suppose we have the following dataset that shows the exam scores of 10 students along with the number of hours they spent studying, the number of prep exams they took, and their current grade in the course: What I would like to know is how these eigenvalues are calculated.
• The presence of multicollinearity can cause serious problems with the estimation of β and the interpretation.

We specify which predictors we'd like to include.

In other words, it’s a byproduct of the model that we specify rather than being present in the data itself. Including the same information twice (weight in pounds and weight in kilograms), not using dummy variables correctly (falling into the dummy variable trap), etc. We obtain the following results: Multicollinearity happens more often than not in such observational studies.

Here’s an example from some of my own work:

Predictor1 = b0 + b2*Predictor2 + ... One predictor variable is a multiple of another. 1) Fit the regression model using the predictor as the outcome. Obvious examples include a person's gender, race, grade point average, math SAT score, IQ, and starting salary.

A method that is often used to reduce multicollinearity is stepwise regression, which drops predictors that add little once the others are in the model.

Examples of multicollinearity. Example #1: let’s assume that ABC Ltd, a KPO, has been hired by a pharmaceutical company to provide research services and. There are two ways of checking for multicollinearity in SPSS: tolerance and VIF. As a measure of multicollinearity, some statistical packages, like SPSS and SAS, give you eigenvalues.
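As a rough idea of where such eigenvalues come from: SPSS documents its collinearity diagnostics as eigenvalues of the scaled, uncentered cross-products matrix, with a condition index for each eigenvalue. The sketch below assumes that definition (every column, including the constant, scaled to unit length before forming X'X). The data are invented, and `jacobi_eigenvalues` is a hand-written helper for small symmetric matrices, not anything SPSS exposes.

```python
import math

def jacobi_eigenvalues(matrix, sweeps=50, tol=1e-18):
    """Eigenvalues of a small symmetric matrix via cyclic Jacobi rotations."""
    n = len(matrix)
    a = [row[:] for row in matrix]
    for _ in range(sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0:
                    continue
                diff = a[q][q] - a[p][p]
                theta = math.pi / 4 if diff == 0 else 0.5 * math.atan(2 * a[p][q] / diff)
                c, s = math.cos(theta), math.sin(theta)
                for k in range(n):  # rotate columns p and q
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # rotate rows p and q
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted((a[i][i] for i in range(n)), reverse=True)

def scaled_cross_products(columns):
    """X'X after scaling every column (constant included) to unit length."""
    unit = []
    for col in columns:
        norm = math.sqrt(sum(v * v for v in col))
        unit.append([v / norm for v in col])
    k = len(unit)
    return [[sum(u * v for u, v in zip(unit[i], unit[j])) for j in range(k)]
            for i in range(k)]

# Invented data: x2 is roughly twice x1, so the two are nearly collinear.
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [2.1, 3.9, 6.2, 8.0, 9.8, 12.1, 14.0, 16.2]
constant = [1.0] * len(x1)

eigenvalues = jacobi_eigenvalues(scaled_cross_products([constant, x1, x2]))
# Condition index: square root of the largest eigenvalue over each eigenvalue.
condition_indices = [math.sqrt(eigenvalues[0] / ev) for ev in eigenvalues]

for ev, ci in zip(eigenvalues, condition_indices):
    print(f"eigenvalue = {ev:.5f}   condition index = {ci:.1f}")
```

Because each scaled column has unit length, the eigenvalues sum to the number of columns. An eigenvalue near 0 paired with a large condition index points to a near-linear dependency among the columns.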

This tutorial explains how to use VIF to detect multicollinearity in a regression analysis in SPSS.
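To make the calculation concrete, here is a minimal sketch of how tolerance and VIF can be computed by hand: regress each predictor on all the others, take the R² of that auxiliary regression, and set tolerance = 1 − R² and VIF = 1 / tolerance. The predictor values are invented, and `ols_r_squared` and `vif_and_tolerance` are hypothetical helper names, not SPSS functions.

```python
def ols_r_squared(y, predictors):
    """R^2 from regressing y on the predictor columns plus an intercept."""
    n = len(y)
    cols = [[1.0] * n] + [[float(v) for v in p] for p in predictors]
    k = len(cols)
    # Normal equations (X'X) b = X'y, stored as an augmented matrix.
    aug = [[sum(u * v for u, v in zip(cols[i], cols[j])) for j in range(k)]
           + [sum(u * v for u, v in zip(cols[i], y))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for i in range(k):
        pivot = max(range(i, k), key=lambda r: abs(aug[r][i]))
        aug[i], aug[pivot] = aug[pivot], aug[i]
        for r in range(k):
            if r != i:
                factor = aug[r][i] / aug[i][i]
                aug[r] = [x - factor * z for x, z in zip(aug[r], aug[i])]
    coef = [aug[i][k] / aug[i][i] for i in range(k)]
    fitted = [sum(c * col[t] for c, col in zip(coef, cols)) for t in range(n)]
    mean_y = sum(y) / n
    ss_res = sum((obs - fit) ** 2 for obs, fit in zip(y, fitted))
    ss_tot = sum((obs - mean_y) ** 2 for obs in y)
    return 1.0 - ss_res / ss_tot

def vif_and_tolerance(predictors):
    """(tolerance, VIF) for each predictor, via one auxiliary regression each."""
    results = []
    for j, target in enumerate(predictors):
        others = predictors[:j] + predictors[j + 1:]
        tolerance = 1.0 - ols_r_squared(target, others)
        results.append((tolerance, 1.0 / tolerance))
    return results

# Invented values for illustration only.
predictor1 = [1, 2, 3, 4, 5, 6, 7, 8]
predictor2 = [2, 1, 4, 3, 6, 5, 8, 7]
predictor3 = [5, 3, 8, 1, 7, 2, 6, 4]

results = vif_and_tolerance([predictor1, predictor2, predictor3])
for i, (tol, vif) in enumerate(results, start=1):
    print(f"predictor{i}: tolerance = {tol:.3f}, VIF = {vif:.3f}")
```

With perfectly collinear predictors the tolerance is 0 and this sketch fails with a division by zero, which matches the fact that VIF is undefined in that case.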

Systolic blood pressure, weight, and age: this example uses a subset of data derived from the 2002 English Health Survey (teaching dataset). Computing the variance inflation factor (henceforth VIF) and the tolerance statistic. Multicollinearity generally occurs when there are high correlations between two or more predictor variables.
• Multicollinearity inflates the variances of the parameter estimates, which may lead to a lack of statistical significance for individual predictor variables even though the overall model is significant.