In other words, it's a byproduct of the model that we specify rather than being present in the data itself. Think of a case where we have a data set and decide to apply a log transform to scale or normalize all of the features.


Examples of multicollinear predictors include the sale price and age of a car, the weight and height of a person, or annual income and years of education.

Some researchers observed the following blood pressure data on 20 individuals with high blood pressure:

This example demonstrates how to test for multicollinearity specifically in multiple linear regression. The predictors are weight (in pounds), height (in inches), and BMI (body mass index). Certain software packages provide a measure of multicollinearity known as the variance inflation factor (VIF); a VIF > 5 suggests high multicollinearity. Suppose x1 = total loan amount, x2 = principal amount, and x3 = interest amount.
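The VIF mentioned above can be computed by hand. The following is a minimal sketch, assuming NumPy is available and using synthetic, made-up height and weight values (not the data from any study quoted here). The helper name `vif` is hypothetical. It regresses each predictor on the others and applies VIF = 1 / (1 - R²).

```python
import numpy as np


def vif(X):
    """Return the variance inflation factor of each column of X.

    VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing
    column j on all the other columns plus an intercept.
    """
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    out = np.empty(k)
    for j in range(k):
        y = X[:, j]
        others = np.delete(X, j, axis=1)
        A = np.column_stack([np.ones(n), others])  # add an intercept column
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
        out[j] = 1.0 / (1.0 - r2)
    return out


# Synthetic, made-up data: weight is constructed to depend on height.
rng = np.random.default_rng(0)
height = rng.normal(70, 3, size=200)                  # inches
weight = 5.0 * height + rng.normal(0, 5, size=200)    # pounds, tied to height
noise = rng.normal(0, 1, size=200)                    # unrelated predictor

vifs = vif(np.column_stack([height, weight, noise]))
for name, value in zip(["height", "weight", "noise"], vifs):
    print(f"{name}: VIF = {value:.2f}")
```

In this sketch the two related predictors should exceed the VIF > 5 rule of thumb, while the unrelated one should stay close to 1.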

High Yield Corporate Bond Index yield effective monthly yields, U.S.

The following examples show the three most common scenarios of perfect multicollinearity in practice. In a weaker model, multicollinearity can be a problem even with a lower VIF.

Multicollinearity in regression analysis occurs when two or more predictor variables are highly correlated with each other, such that they do not provide unique or independent information in the regression model.

This indicates that there is strong multicollinearity among x1, x2 and x3. If the degree of correlation between variables is high enough, it can cause problems. The variables are blood pressure (\(y = BP\), in mm Hg), age (\(x_{1} = age\), in years), and weight (\(x_{2} = weight\), in kg). The alternative hypothesis is \(H_1: r_{x_i x_j \cdot x_1 x_2 \ldots x_k} \neq 0\).

Multicollinearity is a term used in data analytics that describes the occurrence of two explanatory variables in a linear regression model that are found to be correlated through adequate analysis and to a predetermined degree of accuracy.

Treasury Note yield, Merrill Lynch U.S. Examples of multicollinearity. Example #1: Let's assume that ABC Ltd, a KPO, has been hired by a pharmaceutical company to provide research services and ... The offending variables may be dropped from the regression model.

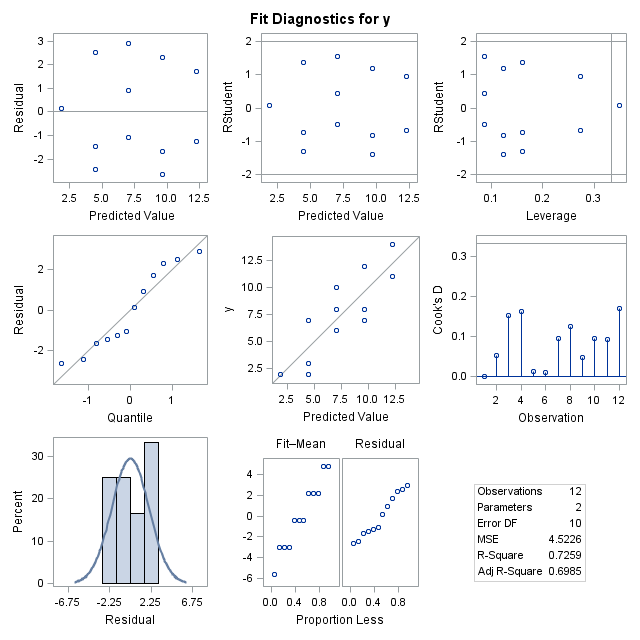

In SAS, when we run PROC REG, we add the `/ VIF TOL` options to the MODEL statement.

SPY) adjusted close prices arithmetic monthly returns, 1 Year U.S. We can find the value of x1 as (x2 + x3). For example, in the cloth manufacturer case, we saw that advertising and volume were correlated predictor variables, resulting in major swings in the estimated impact of advertising depending on whether volume was included in the model.


Common causes include entering the same information twice (weight in pounds and weight in kilograms), not using dummy variables correctly (falling into the dummy variable trap), etc. In the case of multicollinearity, the standard errors are inflated, which makes it harder to reject the null hypothesis in the t-test for an individual coefficient. ... a research project, and tells how to detect multicollinearity and how to reduce it once it is found. The hypothesis to be tested at this step is as follows:

Let’s take an example of loan data.
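A minimal sketch of the loan example, assuming x1 (total loan amount) is exactly x2 (principal) plus x3 (interest). The amounts below are randomly generated for illustration only. Because one column is an exact linear combination of the others, the design matrix loses a rank, which is what perfect multicollinearity means numerically.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 100

# Made-up loan amounts, stored as floats.
principal = rng.integers(1_000, 50_000, size=n).astype(float)  # x2
interest = rng.integers(100, 5_000, size=n).astype(float)      # x3
total = principal + interest                                   # x1 = x2 + x3

# Design matrix with an intercept and all three predictors.
X = np.column_stack([np.ones(n), total, principal, interest])

rank = np.linalg.matrix_rank(X)
print(f"columns: {X.shape[1]}, rank: {rank}")

# Dropping the redundant column restores full column rank.
X_reduced = np.column_stack([np.ones(n), principal, interest])
rank_reduced = np.linalg.matrix_rank(X_reduced)
print(f"columns: {X_reduced.shape[1]}, rank: {rank_reduced}")
```

When the rank is lower than the number of columns, the least-squares coefficients are not uniquely determined, so one of the three loan variables has to be dropped.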

This type of multicollinearity is present in the data itself rather than being an artifact of our model. In the example above, dropping x, y and z was an obvious choice, as the VIF values were well above 10, indicating high multicollinearity. Calculating correlation coefficients is the easiest way to detect multicollinearity for ...
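Screening pairwise correlation coefficients can be sketched as follows. The data are synthetic, the variable names are borrowed from the blood pressure example purely for illustration, and the 0.8 cutoff is an assumption rather than a rule taken from the text.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 300

# Made-up predictors: weight is constructed to track age, stress is unrelated.
age = rng.normal(50, 10, size=n)
weight = 0.8 * age + rng.normal(50, 3, size=n)
stress = rng.normal(50, 10, size=n)

names = ["age", "weight", "stress"]
data = np.column_stack([age, weight, stress])

# Pearson correlation matrix; columns are treated as variables.
corr = np.corrcoef(data, rowvar=False)

threshold = 0.8  # illustrative cutoff (an assumption)
flagged = [
    (names[i], names[j], corr[i, j])
    for i in range(len(names))
    for j in range(i + 1, len(names))
    if abs(corr[i, j]) > threshold
]

for a, b, r in flagged:
    print(f"{a} vs {b}: r = {r:.3f}")
```

Pairwise correlations only catch relationships between two predictors at a time; a predictor that is a combination of several others can slip through, which is one reason the VIF is often used alongside this check.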

S&P 500® Index replicating ETF (ticker symbol:

For example, if you square the term x to model curvature, there is clearly a correlation between \(x\) and \(x^{2}\). This paper is intended for any level of SAS® user. In this situation, the coefficient estimates of the multiple regression may change erratically in response to small changes in the model or the data. This data set includes measurements of 252 men.
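To see the structural correlation between a term and its square, here is a small sketch. It assumes an arbitrary, evenly spaced positive range for x, chosen only for illustration.

```python
import numpy as np

# An arbitrary positive range for x (an assumption for illustration).
x = np.linspace(1, 10, 50)
x_squared = x ** 2

# Pearson correlation between the original term and its square.
r = np.corrcoef(x, x_squared)[0, 1]
print(f"correlation between x and x^2: {r:.3f}")
```

The correlation comes entirely from how the squared term was constructed, not from anything observed in the data, which is what makes it structural.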

The final model had an R² value of 97.7%.

In order to demonstrate the effects of multicollinearity and how to combat it, this paper explores the proposed techniques by using the Youth Risk Behavior Surveillance System data set. For example, structural multicollinearity arises when you have a data set and apply a log transform to normalize or scale the features. Multicollinearity is studied in data science.


You may find that the multicollinearity is a function of the design of the experiment. Suppose we want to use “height in centimeters” and “height in meters” to predict the weight of a certain species of dolphin. One predictor variable is a multiple of another.
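The dolphin example, where one predictor is a multiple of another, can be sketched with made-up heights and weights. It shows that two quite different coefficient vectors give identical fitted values, so the regression cannot tell them apart.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 50

# Made-up dolphin measurements for illustration only.
height_m = rng.uniform(1.5, 3.0, size=n)
height_cm = 100.0 * height_m                 # exact multiple of height_m

X = np.column_stack([np.ones(n), height_cm, height_m])

# Two different coefficient vectors (intercept, cm, m).
b_cm_only = np.array([0.0, 0.8, 0.0])        # all of the effect on centimeters
b_m_only = np.array([0.0, 0.0, 80.0])        # all of the effect on meters

# Both produce the same predictions, so neither is "the" solution.
same_fit = np.allclose(X @ b_cm_only, X @ b_m_only)
rank = np.linalg.matrix_rank(X)
print(f"same fitted values: {same_fit}, rank: {rank} of {X.shape[1]} columns")
```

Keeping only one of the two height columns removes the ambiguity.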

You will find this multicollinearity harder to identify and interpret.

The goal of the study was to develop a model, based on physical measurements, to predict percent body fat. This kind of multicollinearity is already embedded in the feature set of your DataFrame (a pandas DataFrame) and is much harder to observe. You may also find that multicollinearity is a characteristic of the design of the experiment. For illustration, we take a look at a new example, bodyfat.

We focus on a subset of the potential predictors:

2. An example in R: The second, and more dangerous in my opinion, is data multicollinearity. The independent variables are found to be correlated in some regard.

Including identical (or almost identical) variables.

In statistics, multicollinearity is a phenomenon in which one predictor variable in a multiple regression model can be linearly predicted from the others with a substantial degree of accuracy. For instance, weight in pounds and weight in kilos, or investment earnings and savings/bond earnings. The second type is data multicollinearity, which is more dangerous than structural multicollinearity.

For this example, the output shows multicollinearity with volume and ads, but not with price and location.

Treasury Bill yield, 10 Years U.S. That would be an example of structural multicollinearity. Our independent variable (x1) is not exactly independent. The data (bloodpress.txt) were observed on 20 individuals with high blood pressure.

\(H_0: r_{x_i x_j \cdot x_1 x_2 \ldots x_k} = 0\)

For a statistical model involving more than three explanatory variables, we can develop similar formulas for the partial correlation coefficients.
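One way to obtain partial correlation coefficients for any number of explanatory variables is through the inverse of the correlation matrix. The sketch below is one possible approach, not necessarily the formula the source has in mind. The helper name `partial_corr` is hypothetical and the data are synthetic. For three variables the result is checked against the familiar closed form \(r_{12 \cdot 3} = (r_{12} - r_{13} r_{23}) / \sqrt{(1 - r_{13}^{2})(1 - r_{23}^{2})}\).

```python
import numpy as np


def partial_corr(X):
    """Partial correlation of every pair of columns, controlling for the rest.

    Uses the identity rho_ij.rest = -P_ij / sqrt(P_ii * P_jj),
    where P is the inverse of the correlation matrix.
    """
    R = np.corrcoef(X, rowvar=False)
    P = np.linalg.inv(R)
    d = np.sqrt(np.diag(P))
    pc = -P / np.outer(d, d)
    np.fill_diagonal(pc, 1.0)
    return pc


# Synthetic data: x1 and x2 are related only through a shared driver z.
rng = np.random.default_rng(3)
z = rng.normal(size=500)
x1 = z + rng.normal(scale=0.5, size=500)
x2 = z + rng.normal(scale=0.5, size=500)
X = np.column_stack([x1, x2, z])

R = np.corrcoef(X, rowvar=False)
pc = partial_corr(X)

# Closed-form first-order partial correlation for the three-variable case.
r12, r13, r23 = R[0, 1], R[0, 2], R[1, 2]
closed_form = (r12 - r13 * r23) / np.sqrt((1 - r13 ** 2) * (1 - r23 ** 2))

print(f"simple correlation r12:       {r12:.3f}")
print(f"partial correlation r12.3:    {pc[0, 1]:.3f}")
print(f"closed-form check for r12.3:  {closed_form:.3f}")
```

In this constructed example the simple correlation between x1 and x2 is high, but it should largely vanish once z is held fixed, which is the kind of distinction the partial correlation test is designed to expose.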